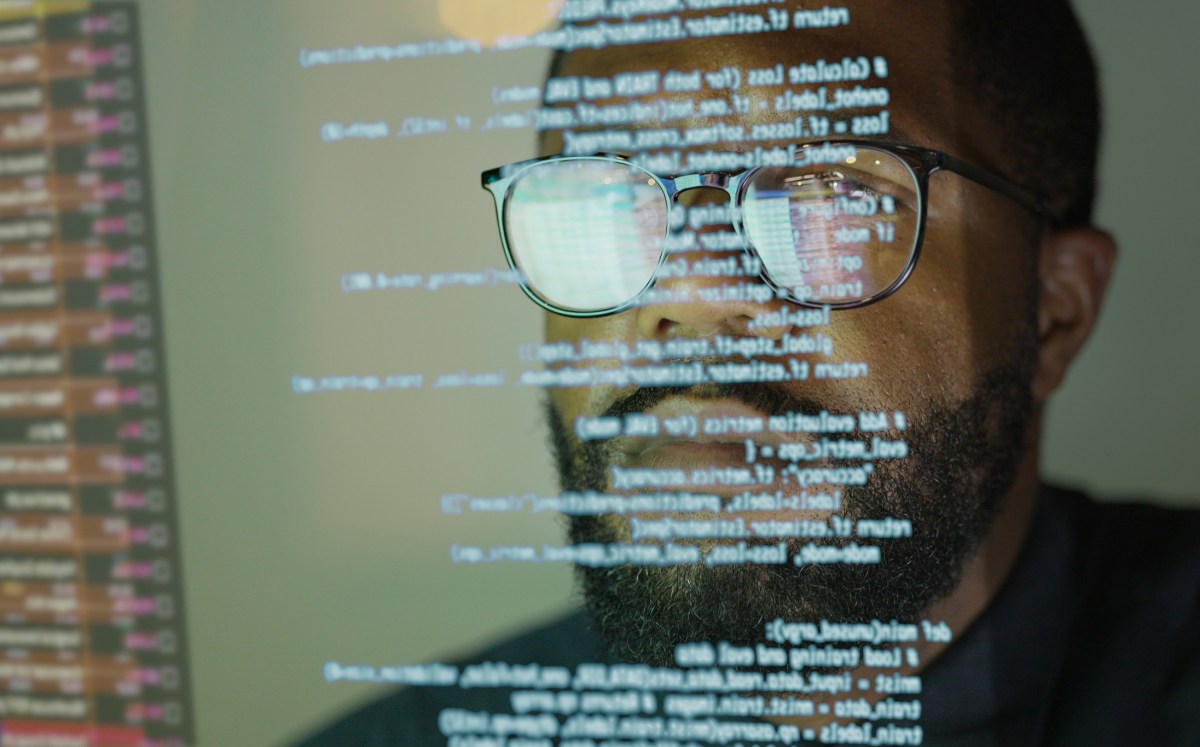

On Thursday, Windsurf, a startup that develops popular AI tools for software engineers, announced the launch of its first family of AI software engineering models, or SWE-1 for short. The startup says it trained its new family of AI models — SWE-1, SWE-1-lite, and SWE-1-mini — to be optimized for the “entire software engineering process,” not just coding.

The launch of Windsurf’s in-house AI models may come as a shock to some, given that OpenAI has reportedly closed a $3 billion deal to acquire Windsurf. However, this model launch suggests Windsurf is trying to expand beyond just developing applications to also developing the models that power them.

According to Windsurf, SWE-1, the largest and most capable AI model of the bunch, performs competitively with Claude 3.5 Sonnet, GPT-4.1, and Gemini 2.5 Pro on internal programming benchmarks. However, SWE-1 appears to fall short of frontier AI models, such as Claude 3.7 Sonnet, on software engineering tasks.

Windsurf says its SWE-1-lite and SWE-1-mini models will be available to all users on its platform, free or paid. Meanwhile, SWE-1 will only be available to paid users. Windsurf did not immediately announce pricing for its SWE-1 models but claims SWE-1 is cheaper to serve than Claude 3.5 Sonnet.

Windsurf is best known for tools that allow software engineers to write and edit code through conversations with an AI chatbot, a practice known as “vibe coding.” Other popular vibe-coding startups include Cursor, the largest in the space, as well as Lovable. Most of these startups, including Windsurf, have traditionally relied on AI models from OpenAI, Anthropic, and Google to power their applications.

In a video announcing the SWE models, Windsurf’s Head of Research, Nicholas Moy, underscores the company’s newest efforts to differentiate its approach. “Today’s frontier models are optimized for coding, and they’ve made massive strides over the last couple of years,” says Moy. “But they’re not enough for us … Coding is not software engineering.”

Windsurf notes in a blog post that while other models are good at writing code, they struggle to work across multiple surfaces, such as terminals, IDEs, and the internet, as programmers often do. The startup says SWE-1 was trained using a new data model and a “training recipe that encapsulates incomplete states, long-running tasks, and multiple surfaces.”

The startup describes SWE-1 as its “initial proof of concept,” suggesting it may release more AI models in the future.